Have you ever stopped to wonder how computers, these seemingly magical boxes of metal, actually work? We interact with them daily, from scrolling through social media to crunching complex data, but the inner workings of these powerful machines often remain a mystery. Let’s unravel this enigma together and embark on a journey to understand the core principles of computer science.

The Heart of the Machine: The CPU and the Language of Binary

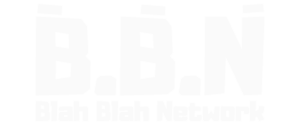

At the core of every computer lies the Central Processing Unit (CPU), a tiny chip made of silicon that serves as the brain of the operation. But how does this piece of silicon manage to control everything your computer does? The answer lies in billions of microscopic switches called transistors.

Think of transistors as tiny light switches that can be either on or off. This simple “on/off” state forms the basis of the binary system, the language computers understand. Each on/off value is a single unit of information called a bit, which can be either a 0 (off) or a 1 (on).

By grouping 8 bits together, we create a byte. Each of the 8 bits can be 0 or 1, so a single byte can represent 2 × 2 × 2 × 2 × 2 × 2 × 2 × 2 = 256 different combinations. That is enough to store any whole number from 0 to 255. This way of writing numbers using only 0s and 1s is called binary, or base 2.
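Python can show this directly with its built-in `format` and `int` functions. Python is used for the small examples in this article purely for readability. A minimal sketch:

```python
# A byte has 8 bits, and each bit has 2 possible states,
# so a byte can hold 2**8 = 256 distinct patterns.
combinations = 2 ** 8
print(combinations)  # 256

# Write the number 13 as an 8-bit binary pattern (8 + 4 + 1 = 13).
pattern = format(13, "08b")
print(pattern)  # 00001101

# Read a binary pattern back as an ordinary number.
value = int("00001101", 2)
print(value)  # 13

# The largest value a single byte can store has all eight bits set to 1.
print(int("11111111", 2))  # 255
```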

While computers think in binary, we humans find it a bit clunky. That’s where hexadecimal comes in handy. It’s a more compact way to write binary, using the digits 0-9 and the letters A-F. Each hexadecimal digit stands for exactly 4 bits, so a full byte is always two hex digits (11111111 in binary is FF in hex). This makes binary data such as memory addresses and color codes much easier for us humans to read and write.
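Python can convert between these notations too. This sketch splits one byte into its two 4-bit halves, often called nibbles, to show how each half maps to a single hex digit:

```python
# Each hex digit covers exactly 4 bits (2**4 = 16 values: 0-9, then A-F),
# so any byte can be written as exactly two hex digits.
byte = 0b10101111          # a binary literal for one byte (175 in decimal)
print(format(byte, "X"))   # AF

# Split the byte into its two 4-bit halves to see the correspondence.
high_nibble = byte >> 4     # top 4 bits:    1010 -> A
low_nibble = byte & 0b1111  # bottom 4 bits: 1111 -> F
print(format(high_nibble, "04b"), format(high_nibble, "X"))  # 1010 A
print(format(low_nibble, "04b"), format(low_nibble, "X"))    # 1111 F

# Converting hex text back to a number works just like binary.
print(int("FF", 16))  # 255
```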

Logic Gates: Building Blocks of Computation

Now that we can store information, how do we get computers to do something with it? Enter logic gates. These are electronic circuits built using transistors that act like the decision-makers of the computer world. They take in binary inputs (0s and 1s) and, based on their logic, produce a specific binary output.

Picture a logic gate as a door with two locks (inputs). The door opens (output is 1) only when both locks are unlocked (both inputs are 1). This is an example of an AND gate. Other gates follow different rules. An OR gate outputs 1 if at least one input is 1, and a NOT gate flips a single input. By combining different types of logic gates, we can build complex circuits that perform arithmetic and logical operations. These circuits follow the rules of Boolean algebra, a branch of mathematics for working with true/false values.
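To make this concrete, here is a small Python sketch that models gates as functions on 0s and 1s. It is a software simulation rather than real circuitry, and the function names are illustrative. It combines AND, OR, and NOT into an XOR ("exclusive or") gate. It then uses XOR and AND to build a half adder, the circuit that adds two single bits:

```python
# Basic logic gates modeled as functions on bits (0 or 1).
def and_gate(a: int, b: int) -> int:
    return a & b

def or_gate(a: int, b: int) -> int:
    return a | b

def not_gate(a: int) -> int:
    return 1 - a

def xor_gate(a: int, b: int) -> int:
    # Built only from AND, OR, and NOT: "a or b, but not both".
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two single bits, returning (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)

# Truth table for the AND gate (the "door with two locks").
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", and_gate(a, b))

print(half_adder(1, 1))  # (0, 1): 1 + 1 = 10 in binary
```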

From Binary to Human-Readable: Character Encoding and Operating Systems

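While computers crunch binary code, we humans prefer letters, numbers, and symbols. That’s where character encoding comes in. Systems like ASCII assign a unique number, stored in binary, to each character. In ASCII, for example, the uppercase letter ‘A’ is 65, or 01000001. Modern computers mostly use Unicode, which extends the same idea to characters from almost every writing system. When you press a key, the keyboard sends a code identifying which key was pressed. The operating system translates it into the matching character code, and software uses that code to draw the right character on your screen.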
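Python exposes these character codes directly through the built-in `ord` and `chr` functions and the `str.encode` method. The sketch below shows ASCII codes first. For contrast, it then shows how UTF-8, the widely used encoding of Unicode, stores a non-ASCII character in more than one byte:

```python
# Look up the numeric code for a character, and its 8-bit binary form.
code = ord("A")
print(code)                 # 65
print(format(code, "08b"))  # 01000001

# Go the other way: from a number back to a character.
print(chr(97))  # a

# Encode a whole string as bytes using ASCII.
data = "Hi!".encode("ascii")
print(list(data))  # [72, 105, 33]

# Characters outside ASCII need a larger encoding such as UTF-8,
# which may use several bytes per character.
print(list("é".encode("utf-8")))  # [195, 169]
```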

But who coordinates all of this activity? That’s the job of the operating system (OS), such as Windows, macOS, or Linux. The OS is itself software running on the CPU. It acts like the conductor of an orchestra, managing all the hardware and software components of your computer. It provides a platform for applications to run, manages memory allocation, and handles input and output from various devices.

The CPU’s Workflow: Fetch, Decode, Execute, Store

Think of the CPU as a super-fast but forgetful chef. It can follow instructions to the letter, but it can only hold a few ingredients (data) in its hands at a time. These “hands” are the CPU’s registers, a handful of tiny, extremely fast storage slots. That’s where Random Access Memory (RAM) comes in.

RAM is like the chef’s countertop, providing temporary storage for data and instructions that the CPU needs to access quickly. The CPU follows a four-step cycle:

- Fetch: The CPU retrieves the next instruction from RAM.

- Decode: The instruction is broken down into parts the CPU understands.

- Execute: The CPU performs the operation specified by the instruction.

- Store: The result is written back, either into one of the CPU’s registers or into RAM.
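To see the cycle in action, here is a toy CPU written in Python. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the two registers `A` and `B`, and the memory layout are all invented for illustration. Real instruction sets are encoded in binary and are far larger. As in real RAM, instructions and data share the same `ram` list:

```python
# A toy CPU that runs a fetch-decode-execute-store loop.
# The instruction set (LOAD, ADD, STORE, HALT) is invented for illustration.

def run(ram: list) -> list:
    registers = {"A": 0, "B": 0}  # the "chef's hands": tiny, fast storage
    pc = 0                        # program counter: address of the next instruction

    while True:
        # Fetch: read the instruction at the program counter from RAM.
        instruction = ram[pc]
        pc += 1

        # Decode: split the instruction into an operation and its operands.
        op, *operands = instruction

        # Execute, then store the result where the instruction says.
        if op == "LOAD":        # LOAD reg, addr  -> copy RAM[addr] into reg
            reg, addr = operands
            registers[reg] = ram[addr]
        elif op == "ADD":       # ADD dest, src   -> dest = dest + src
            dest, src = operands
            registers[dest] = registers[dest] + registers[src]
        elif op == "STORE":     # STORE reg, addr -> write reg back into RAM
            reg, addr = operands
            ram[addr] = registers[reg]
        elif op == "HALT":
            return ram
        else:
            raise ValueError(f"unknown instruction: {op}")

# Addresses 0-4 hold the program; addresses 5-7 hold data.
memory = [
    ("LOAD", "A", 5),   # A = RAM[5]
    ("LOAD", "B", 6),   # B = RAM[6]
    ("ADD", "A", "B"),  # A = A + B
    ("STORE", "A", 7),  # RAM[7] = A
    ("HALT",),
    2, 3, 0,
]
print(run(memory)[7])  # 5
```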

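This cycle repeats billions of times per second, guided by a clock that synchronizes operations. The clock’s speed is measured in gigahertz (GHz), or billions of cycles per second. It is a major factor in how many instructions the CPU can execute per second, though how much work the CPU completes in each cycle matters too.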

To further boost performance, modern CPUs have multiple cores. Each core can execute instructions independently, so several programs can truly run at the same time. Many cores can also run two or more threads (independent streams of instructions) at once by sharing their internal resources. This keeps each core busy and further improves multitasking.

Talking to Computers: From Machine Code to Programming Languages

In the early days of computing, programmers had to write instructions directly in machine code, the raw binary language understood by CPUs. This was incredibly tedious and error-prone. Thankfully, programming languages emerged as a more human-friendly way to interact with computers.

Programming languages use abstraction to simplify complex tasks. Instead of writing long strings of 0s and 1s, you can use words and symbols that resemble human language. This code is then translated into machine code that the CPU can execute.

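Programming languages are often grouped by how their code gets run:

- Interpreted languages like Python are run by a program called an interpreter, which executes the code statement by statement as the program runs, with no separate build step. This makes them great for rapid prototyping and scripting.

- Compiled languages like C and Go are translated into machine code by a compiler before the program runs, which typically results in faster execution.

In practice the line is blurry. The standard Python interpreter, for example, first compiles code into an intermediate form called bytecode and then runs that.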

The Programmer’s Toolkit: Variables, Data Types, and Data Structures

Regardless of the language, programmers rely on a set of fundamental tools:

- Variables: Think of variables as containers for storing information. They have names and can hold different types of data.

- Data Types: Data types define the kind of information a variable can hold, such as numbers, text, or true/false values. Common data types include:
  - Integers: Whole numbers (e.g., 5, -10).
  - Floating-Point Numbers: Numbers with decimal points (e.g., 3.14, -2.5).
  - Characters: Single letters, digits, or symbols (e.g., ‘A’, ‘5’, ‘$’).
  - Strings: Sequences of characters (e.g., “Hello, world!”).
  - Booleans: Values that are either true or false.

- Data Structures: These are ways to organize and store collections of data efficiently. Some common data structures include:
  - Arrays: Ordered lists of elements, accessible by their numerical index.
  - Linked Lists: Chains of elements, each pointing to the next element in the sequence.
  - Hash Maps: Collections of key-value pairs, allowing for efficient retrieval of values based on their associated keys.
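Here is how these ideas look in Python, as a quick sketch. Two caveats apply. First, Python has no separate character type, so a single character is just a string of length 1. Second, Python has no built-in linked list, so the `Node` class and `linked_list_values` helper below are illustrative names defined only for this example. Python’s `list` plays the role of an array, and its `dict` is a hash map.

```python
# Variables: names bound to values of different data types.
age = 30                    # integer
price = 19.99               # floating-point number
initial = "A"               # no separate char type: a 1-character string
greeting = "Hello, world!"  # string
is_member = True            # boolean

print(type(age).__name__, type(price).__name__, type(is_member).__name__)  # int float bool

# Array-like list: ordered, accessed by numerical index (starting at 0).
scores = [88, 92, 75]
print(scores[1])  # 92

# Linked list: each node stores a value and a reference to the next node.
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node

head = Node(1, Node(2, Node(3)))  # 1 -> 2 -> 3

def linked_list_values(node):
    """Walk the chain from the head, collecting each value."""
    values = []
    while node is not None:
        values.append(node.value)
        node = node.next
    return values

print(linked_list_values(head))  # [1, 2, 3]

# Hash map: a dict maps keys to values with fast average-case lookup.
capitals = {"France": "Paris", "Japan": "Tokyo"}
print(capitals["Japan"])  # Tokyo
```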

Algorithms: Step-by-Step Problem Solving

At its core, programming is about solving problems. Algorithms are the recipes we use to guide computers towards solutions. An algorithm is a set of well-defined steps that take some input, process it, and produce a desired output.

For example, a simple algorithm for finding the largest number in a list might involve:

- Starting with the first number as the largest.

- Comparing it to the next number in the list.

- If the next number is larger, update the largest number.

- Repeating the comparison and update for each remaining number. Once the end of the list is reached, the current largest number is the answer.
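The steps above translate almost line for line into Python. `find_largest` is an illustrative name, and the sketch assumes the list is not empty:

```python
def find_largest(numbers: list[float]) -> float:
    """Return the largest value in a non-empty list."""
    if not numbers:
        raise ValueError("find_largest() needs at least one number")
    largest = numbers[0]   # start with the first number as the largest
    for n in numbers[1:]:  # go through every remaining number
        if n > largest:    # compare it with the current largest
            largest = n    # update if the new number is larger
    return largest

print(find_largest([3, 17, 8, 42, 5]))  # 42
```

Python’s built-in `max` function does the same job. The explicit loop just makes each step of the algorithm visible.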

Algorithms can be expressed in various ways, including flowcharts, pseudocode, and of course, code in a specific programming language.

Functions: Reusable Blocks of Code

To avoid writing the same code over and over again, programmers use functions. Functions are self-contained blocks of code that perform specific tasks. They can take input values (arguments) and produce output values (return values).

For instance, you could write a function called calculate_average that takes a list of numbers as input and returns their average. This function can then be reused whenever you need to calculate averages, saving you time and effort.
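A minimal Python version of the `calculate_average` function described above might look like this. Raising an error on an empty list is one reasonable design choice, not the only one:

```python
def calculate_average(numbers: list[float]) -> float:
    """Return the arithmetic mean of a non-empty list of numbers."""
    if not numbers:
        raise ValueError("cannot average an empty list")
    return sum(numbers) / len(numbers)

# Reuse the same function wherever an average is needed.
print(calculate_average([2, 4, 9]))         # 5.0
print(calculate_average([90, 85, 77, 88]))  # 85.0
```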

Recursion: When Functions Call Themselves

In some cases, a problem can be broken down into smaller, self-similar subproblems. This is where recursion comes in handy. Recursion is a powerful technique where a function calls itself within its own definition.

Imagine you need to calculate the factorial of a number (e.g., 5! = 5 * 4 * 3 * 2 * 1). A recursive function to calculate this might look like this:

- If the input number is 0 or 1, return 1 (base case; by definition, 0! = 1).

- Otherwise, return the input number multiplied by the factorial of the input number minus 1 (recursive step).
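Translated into Python, the two rules become an `if` for the base case and a self-call for the recursive step. This sketch also rejects negative numbers, since factorial is only defined for non-negative integers:

```python
def factorial(n: int) -> int:
    """Compute n! recursively for a non-negative integer n."""
    if n < 0:
        raise ValueError("factorial is undefined for negative numbers")
    if n <= 1:                   # base case: 0! = 1! = 1
        return 1
    return n * factorial(n - 1)  # recursive step

print(factorial(5))  # 120
```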

While elegant, recursion can be computationally expensive if not used carefully. Every call takes up memory on the call stack, and a naive recursive solution may solve the same subproblem many times over. Techniques like memoization help by storing previously calculated results so they can be reused instead of recomputed.
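Factorial itself gains little from memoization within a single call, because it never solves the same subproblem twice. The classic illustration is instead the Fibonacci sequence, a swapped-in example in which each number is the sum of the two before it. Its naive recursive version recomputes the same values again and again. This sketch counts those calls and then uses Python’s standard `functools.lru_cache` decorator to memoize the function:

```python
from functools import lru_cache

calls = 0

def fib_naive(n: int) -> int:
    """Plain recursion: recomputes the same subproblems over and over."""
    global calls
    calls += 1
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)

@lru_cache(maxsize=None)  # memoization: cache each result after its first computation
def fib_memo(n: int) -> int:
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)

print(fib_naive(20), calls)          # 6765 21891
print(fib_memo(20))                  # 6765
print(fib_memo.cache_info().misses)  # 21: each n from 0 to 20 computed once
```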

Evaluating Algorithm Efficiency: Time and Space Complexity

Not all algorithms are created equal. Some are faster and more memory-efficient than others. Time complexity and space complexity are measures used to analyze the efficiency of algorithms.

- Time complexity describes how the runtime of an algorithm increases as the input size grows.

- Space complexity describes how the memory usage of an algorithm increases as the input size grows.

These complexities are expressed using Big O notation, which describes how an algorithm’s cost grows with the input size while ignoring constant factors. For instance, an algorithm with O(n) time complexity has a runtime that grows linearly with the input size (n). Doubling the input roughly doubles the work.
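One way to feel the difference between growth rates is to count steps instead of timing them. In this sketch the helper names are illustrative. `count_linear_steps` does one unit of work per item, which is O(n). `count_quadratic_steps` does one unit of work per pair of items, which is O(n²):

```python
def count_linear_steps(items: list) -> int:
    """O(n): one unit of work per item, like scanning a list once."""
    steps = 0
    for _ in items:
        steps += 1
    return steps

def count_quadratic_steps(items: list) -> int:
    """O(n^2): one unit of work per pair, like comparing every item with every item."""
    steps = 0
    for _ in items:
        for _ in items:
            steps += 1
    return steps

for n in (10, 100, 1000):
    data = list(range(n))
    print(n, count_linear_steps(data), count_quadratic_steps(data))
# 10 10 100
# 100 100 10000
# 1000 1000 1000000
```

Multiplying the input by 10 multiplies the linear count by 10 but the quadratic count by 100. That gap is exactly what Big O notation captures.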

Programming Paradigms: Different Approaches to Problem Solving

Just like there are multiple ways to bake a cake, there are various approaches to writing code. These approaches are known as programming paradigms. Some popular paradigms include:

- Imperative Programming: This paradigm focuses on explicitly telling the computer how to do something, step by step.

- Declarative Programming: This paradigm focuses on describing what you want to achieve, leaving the implementation details to the programming language or underlying system.

- Object-Oriented Programming (OOP): This paradigm revolves around the concept of objects, which encapsulate data and behavior. OOP promotes code reusability and modularity.
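The same small task can be written in each style. This Python sketch adds up the squares of the even numbers in a list in three ways. `NumberCollection` is a hypothetical class made up for the example. Python is not a purely declarative language (SQL is a more typical example), so the middle version only approximates that style:

```python
# Task: add up the squares of the even numbers in a list.
numbers = [1, 2, 3, 4, 5, 6]

# Imperative: spell out each step and update state as you go.
total = 0
for n in numbers:
    if n % 2 == 0:
        total += n * n
print(total)  # 56

# Declarative style: describe the result you want; the looping is handled for you.
print(sum(n * n for n in numbers if n % 2 == 0))  # 56

# Object-oriented: bundle the data with the behavior that operates on it.
class NumberCollection:
    def __init__(self, values):
        self.values = list(values)

    def sum_of_even_squares(self):
        return sum(n * n for n in self.values if n % 2 == 0)

print(NumberCollection(numbers).sum_of_even_squares())  # 56
```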

Machine Learning: Teaching Computers to Learn

What happens when we encounter problems that are difficult to explicitly program solutions for? Think image recognition, natural language processing, or predicting future trends. This is where machine learning comes into play.

Machine learning is a subfield of artificial intelligence that focuses on enabling computers to learn from data without being explicitly programmed for every scenario. Instead of writing specific rules, we give algorithms large amounts of example data and let them find the patterns they need to make predictions.
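Real machine learning systems train large models on large datasets, but the core idea, letting data determine the rule, fits in a few lines. This sketch uses simple linear regression (ordinary least squares), one of the most basic learning methods, to learn a straight line from example points. The study-hours data and the helper names `fit_line` and `predict` are hypothetical:

```python
# Learn y = slope * x + intercept from example data with ordinary least squares,
# instead of writing the rule by hand.
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The slope measures how strongly y moves together with x across the data.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 57, 61, 68, 72]

slope, intercept = fit_line(hours, scores)

def predict(x: float) -> float:
    """Use the learned line to predict a score for an unseen input."""
    return slope * x + intercept

print(round(slope, 2), round(intercept, 2))  # 5.1 46.7
print(round(predict(6), 1))                  # 77.3
```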

Connecting the World: The Internet and the World Wide Web

The internet, a vast network of interconnected computers, has revolutionized the way we communicate and access information. At its core, the internet relies on a set of protocols that govern how data is transmitted between devices.

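- IP Addresses: Every device communicating over the internet is identified by an Internet Protocol (IP) address, which tells the network where to deliver data. (Devices on a home network often share one public IP address through their router.)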

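- TCP/IP: The Transmission Control Protocol/Internet Protocol (TCP/IP) suite defines how data is broken down into packets, sent across the network, and reassembled at the destination. IP handles addressing and routing for each packet, while TCP makes sure the packets arrive reliably and are put back in the right order.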
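The packet idea can be sketched in Python. This toy model only captures numbering and reordering. Real TCP numbers bytes rather than chunks, and it also handles acknowledgments, retransmission of lost packets, and flow control. The function names are illustrative:

```python
import random

def split_into_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Break a message into (sequence_number, chunk) pairs."""
    return [(i, message[start:start + size])
            for i, start in enumerate(range(0, len(message), size))]

def reassemble(packets: list[tuple[int, str]]) -> str:
    """Put packets back in order by sequence number and join the chunks."""
    return "".join(chunk for _, chunk in sorted(packets))

packets = split_into_packets("Hello from across the network!", size=8)
random.shuffle(packets)  # packets may take different routes and arrive out of order
print(reassemble(packets))  # Hello from across the network!
```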

The World Wide Web (WWW) is built on top of the internet and provides a way to access and share information using web browsers. Key components of the web include:

- URLs: Uniform Resource Locators (URLs) are the addresses we use to access web pages.

- HTTP: The Hypertext Transfer Protocol (HTTP) is used by web browsers to communicate with web servers, requesting and receiving web pages and other resources.

- HTML, CSS, and JavaScript: HTML structures a page’s content, CSS controls how it looks, and JavaScript adds interactive behavior.
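Python’s standard `urllib.parse.urlparse` function splits a URL into the parts a browser uses. The sketch then builds the text of a minimal HTTP/1.1 `GET` request for that URL. Real browsers send many more headers, and for `https` URLs the request travels inside an encrypted TLS connection. The URL itself is a made-up example:

```python
from urllib.parse import urlparse

url = urlparse("https://www.example.com/articles/intro?page=2")
print(url.scheme)  # https
print(url.netloc)  # www.example.com
print(url.path)    # /articles/intro
print(url.query)   # page=2

# The text of a minimal HTTP/1.1 request a browser could send for this URL.
# HTTP/1.1 requires the Host header; a blank line ends the headers.
request = (
    f"GET {url.path}?{url.query} HTTP/1.1\r\n"
    f"Host: {url.netloc}\r\n"
    "\r\n"
)
print(request)
```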

Databases: Organizing and Managing Information

As we generate and consume ever-increasing amounts of data, efficient storage and retrieval become crucial. Databases provide structured ways to organize and manage information.

- Relational databases are the most common type, organizing data into tables with rows (records) and columns (fields).

- SQL (Structured Query Language) is a powerful language used to interact with relational databases, allowing users to insert, retrieve, update, and delete data.
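Python ships with `sqlite3`, an interface to the lightweight SQLite relational database, which makes it easy to try SQL without installing a server. This sketch creates an in-memory table of hypothetical student grades. It then inserts, updates, deletes, and finally retrieves data:

```python
import sqlite3

# An in-memory SQLite database that exists only while this script runs.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# A table: each row is a record, each column a field.
cur.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, grade INTEGER)")

# Insert, using ? placeholders rather than pasting values into the SQL string.
cur.executemany(
    "INSERT INTO students (name, grade) VALUES (?, ?)",
    [("Ada", 90), ("Grace", 85), ("Alan", 78)],
)

# Update one record and delete another.
cur.execute("UPDATE students SET grade = ? WHERE name = ?", (88, "Grace"))
cur.execute("DELETE FROM students WHERE name = ?", ("Alan",))

# Retrieve what is left.
rows = cur.execute("SELECT name, grade FROM students ORDER BY name").fetchall()
print(rows)  # [('Ada', 90), ('Grace', 88)]

conn.close()
```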

Conclusion

From the tiny transistors in our smartphones to the vast networks that connect us globally, computer science underpins countless aspects of our modern world. Understanding the fundamental principles of this ever-evolving field empowers us to not only navigate the digital landscape but also to shape the future of technology itself.